Hyperscale computing – load balancing for large quantities of data

Big data and cloud computing are all the rage. Industry 4.0, the Internet of Things, and self-driving cars have become topics of everyday conversation. All these technologies rely on networking to link together large numbers of sensors, devices, and computers. They generate huge volumes of data, all of which has to be processed in real time and converted into actions almost instantly. Whether in the industrial sector or private homes, in science or research, data volumes are expanding exponentially. Every minute, around 220,000 Instagram posts, 280,000 tweets, and 205 million emails are sent or uploaded online.

However, data volumes fluctuate, so it isn’t always possible to know how much server capacity will be required, or when. This is why server infrastructures have to be scalable. In this guide to hyperscale computing we’re going to look at what physical structures are used to achieve this and how they can best be linked together. This will help you decide what type of server solution is best suited to your needs.

What is hyperscale?

In computing, the term “hyperscale” refers to scalable cloud computing systems in which a very large number of servers are networked together. The number of servers used at any one time can increase or decrease to respond to changing requirements. This means the network can efficiently handle both large and small volumes of data traffic.

If a network is described as “scalable”, it can adapt to changing performance requirements. Hyperscale servers are small, simple systems that are designed for a specific purpose. To achieve scalability, the servers are networked together “horizontally”: to increase the computing power of the overall system, more servers are added instead of upgrading individual machines. Horizontal scaling is also referred to as “scaling out”.

The alternative solution – vertical scaling or scaling up – involves upgrading an existing local system, for example, by using better hardware: more RAM, a faster CPU or graphics card, more powerful hard drives, etc. In practice, vertical scaling usually comes first. In other words, local systems are upgraded as far as is technically feasible, or as much as the hardware budget permits, at which point the only remaining solution is generally hyperscale.
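
The difference between the two approaches can be made concrete with a little arithmetic. The following Python sketch is purely illustrative: the function name `servers_needed` and all figures (requests per second, capacity per server) are assumptions chosen for the example, not values from a real deployment.

```python
import math


def servers_needed(peak_load: float, capacity_per_server: float) -> int:
    """Return how many identical servers are needed to handle peak_load.

    Both arguments use the same unit (here: requests per second).
    """
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    if peak_load <= 0:
        return 0
    return math.ceil(peak_load / capacity_per_server)


# Hypothetical figures: one simple server handles 500 requests per second.
quiet_night = servers_needed(1_200, 500)   # 3 servers
sales_peak = servers_needed(48_000, 500)   # 96 servers

# Scaling out means adding the difference; scaling in means removing it again.
extra_servers = sales_peak - quiet_night   # 93 additional servers
```

With vertical scaling, the same peak would have to be absorbed by a single machine, which is where hardware and budget limits are reached.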

How does hyperscale work?

In hyperscale computing, simple servers are networked together horizontally. “Simple” here doesn’t mean primitive; it means the servers are easy to network together. Only a few basic conventions (e.g. network protocols) are used. This makes it easier to manage communication between the different servers.

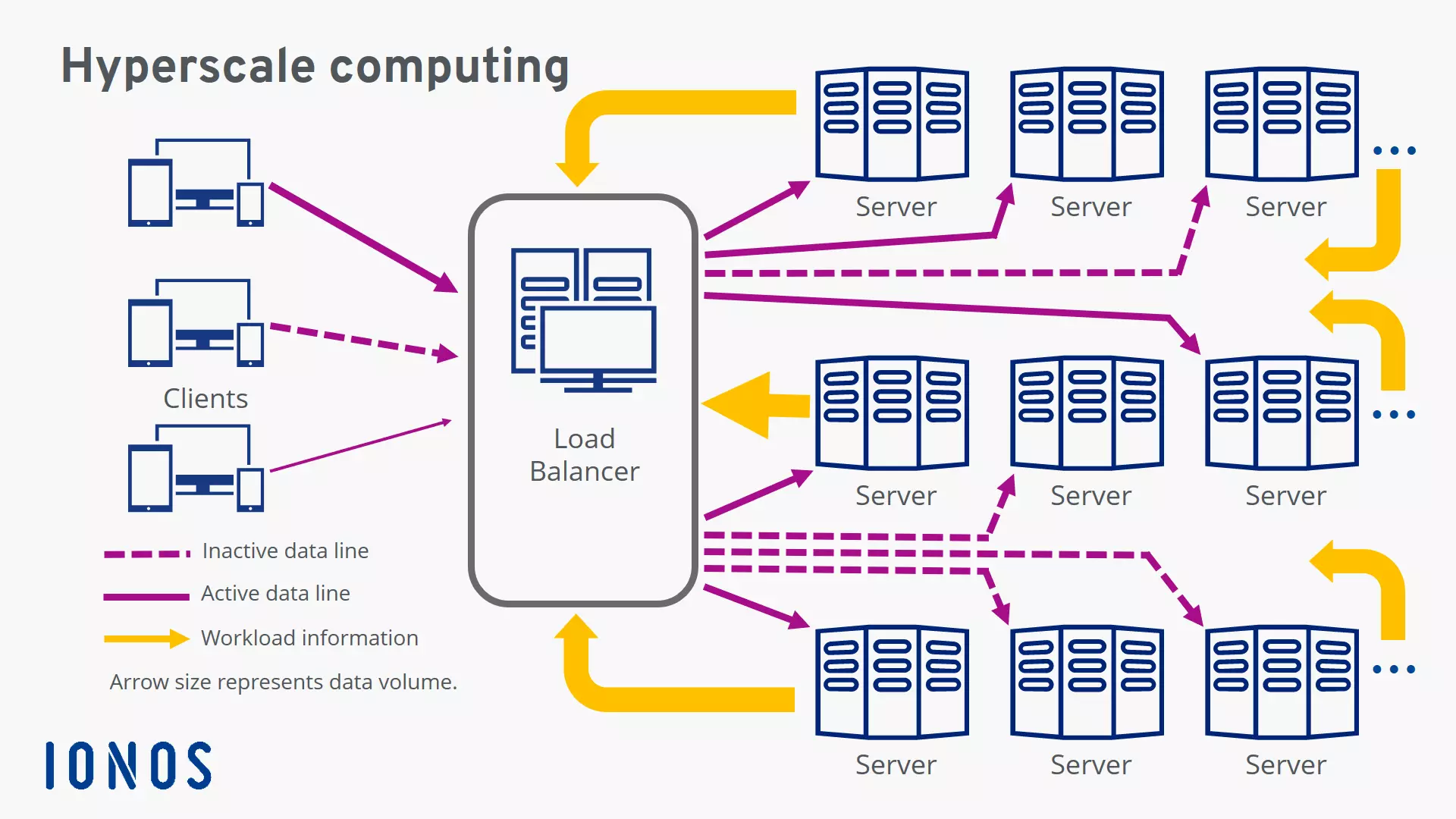

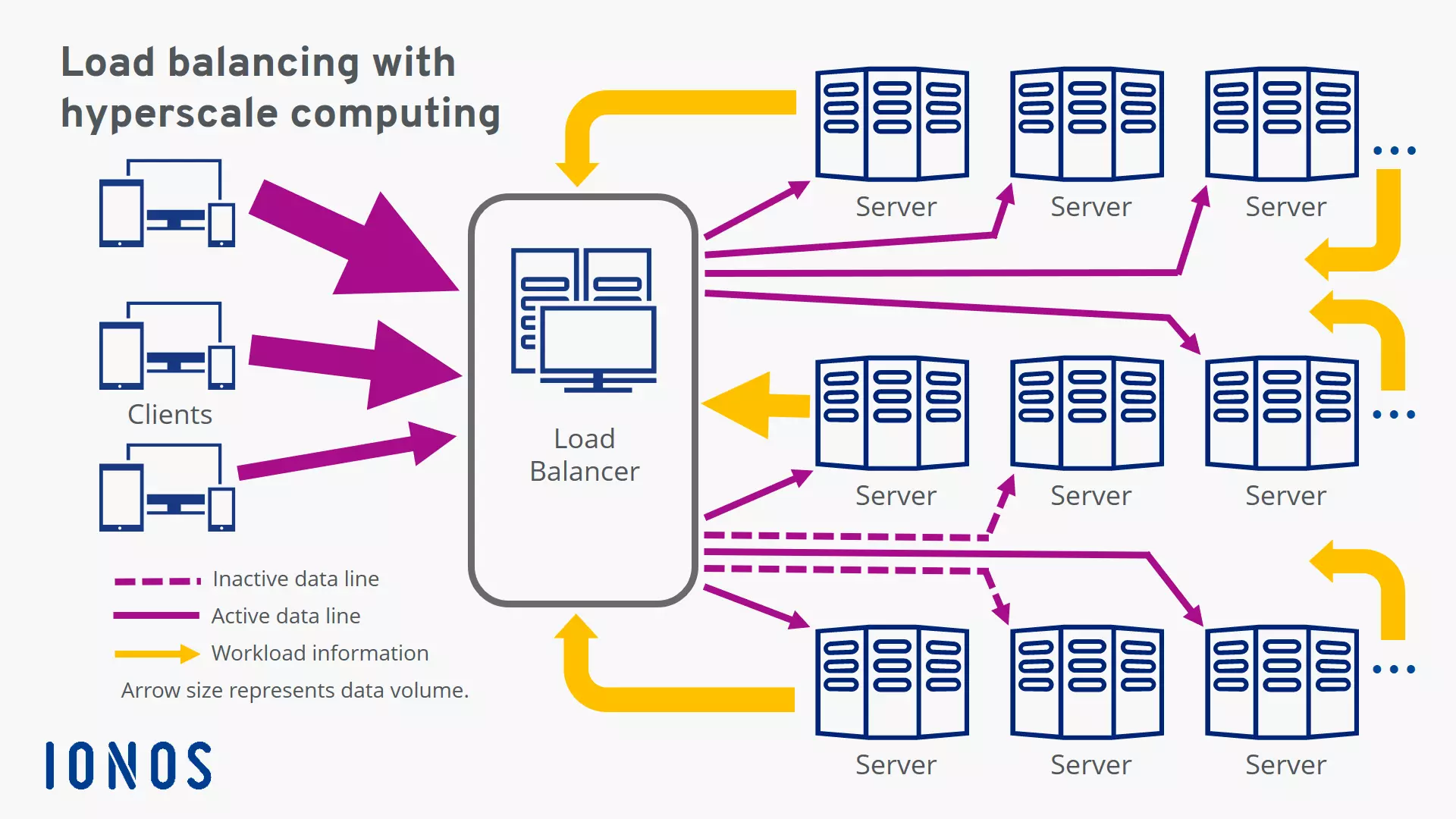

The network includes a computer known as a “load balancer”. The load balancer is responsible for managing incoming requests and routing them to the servers that have the most capacity. It continuously monitors the load on each server and the amount of data that needs processing and switches servers on or off accordingly.
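
The behaviour described above can be sketched in a few lines of Python. This is a simplified toy model, not the implementation used by any particular provider: the class names `Server` and `LoadBalancer`, the capacity unit (concurrent requests), and the rule for switching servers on and off are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity: int              # assumed unit: maximum concurrent requests
    active_requests: int = 0
    powered_on: bool = True

    @property
    def free_capacity(self) -> int:
        return self.capacity - self.active_requests


class LoadBalancer:
    """Toy load balancer: least-loaded routing plus simple scale out/in."""

    def __init__(self, servers: list[Server]) -> None:
        self.servers = servers

    def route(self) -> Server:
        """Send one request to the running server with the most free capacity."""
        running = [s for s in self.servers if s.powered_on and s.free_capacity > 0]
        if not running:
            # Every running server is full: switch on a standby server.
            standby = next((s for s in self.servers if not s.powered_on), None)
            if standby is None:
                raise RuntimeError("no capacity left in the pool")
            standby.powered_on = True
            running = [standby]
        target = max(running, key=lambda s: s.free_capacity)
        target.active_requests += 1
        return target

    def finish(self, server: Server) -> None:
        """Mark one request on the given server as completed."""
        if server.active_requests > 0:
            server.active_requests -= 1

    def scale_in(self) -> None:
        """Switch off idle servers, always keeping at least one running."""
        for s in self.servers:
            still_running = sum(x.powered_on for x in self.servers)
            if s.powered_on and s.active_requests == 0 and still_running > 1:
                s.powered_on = False


# Hypothetical pool: server "b" starts switched off as a standby.
pool = [Server("a", capacity=2), Server("b", capacity=2, powered_on=False)]
lb = LoadBalancer(pool)
first = lb.route()    # handled by "a"
second = lb.route()   # handled by "a", which is now full
third = lb.route()    # "b" is switched on and takes the request
```

A production load balancer would also consider health checks, response times, and start-up delays, all of which are left out of this sketch.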

Analyses show that companies only actively use 25 to 30 percent of their data. The rest consists of backup copies, customer data, recovery data, etc. Without a carefully organized system, data can be hard to find and it can sometimes take days to make backups. Hyperscale computing simplifies all of this, because the hardware required for computing, storage, and networking is managed through a single point of contact, together with the data backups, operating systems, and other necessary software. By combining hardware with supporting systems, companies can expand their current computing environment to several thousand servers.

This limits the need to keep copying data and makes it easier for companies to implement guidelines and security checks, which in turn reduces personnel and admin costs.

Advantages and disadvantages of hyperscale computing

Being able to quickly increase or decrease server capacity has both advantages and disadvantages:

Advantages

- There are practically no limits to scaling, so companies have the flexibility to expand their system whenever they need to. This means they can adapt quickly and cost-effectively to changes in the market.

- Companies do not have to commit in advance to long-term strategies for developing their IT networks.

- Hyperscale computing providers use the principle of redundancy to guarantee a high level of reliability.

- Companies can avoid becoming dependent on a single provider by using several different providers simultaneously.

- Costs are predictable and the solution is cost-efficient, which helps companies achieve their objectives.
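
The effect of the redundancy mentioned in the list above can be estimated with a simple formula. The sketch below assumes that servers fail independently of one another, which is a simplification, and that the service stays up as long as at least one replica is running. The function name `combined_availability` and the 99 percent figure are assumptions for illustration.

```python
def combined_availability(single: float, replicas: int) -> float:
    """Availability of a service backed by several redundant servers.

    Assumes independent failures: the service is only down when all
    replicas are down at the same time, so the result is
    1 - (1 - single) ** replicas.
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("single must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return 1.0 - (1.0 - single) ** replicas


# Hypothetical example: each server is available 99 percent of the time.
one_server = combined_availability(0.99, 1)     # about 0.99
two_servers = combined_availability(0.99, 2)    # about 0.9999
three_servers = combined_availability(0.99, 3)  # about 0.999999
```

In practice, failures are often correlated (shared power, network, or software faults), so real-world figures tend to be lower than this idealised estimate.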

Disadvantages

- Companies have less control over their data.

- Adding new memory/server capacity can introduce errors.

- Requirements in terms of internal management and employee responsibility are greater, although this is an advantage in the long term.

- Users become dependent on the pricing structure of their hyperscale provider.

- Each provider has its own user interface.

As a way of balancing the pros and cons, companies can choose a hybrid solution and only use the cloud to store particularly large backups, or data they don’t need very often. This frees up storage space in their in-house data centre. Examples include personal data about users of an online shopping site that must be disclosed to individual users and deleted upon request, or data that a company has to keep to meet legal requirements.
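
Such a hybrid rule can be written down as a simple placement policy. The following Python sketch is only one possible way to express it: the `Dataset` fields, the `placement` function, and both thresholds are hypothetical and would need to be tuned to a company's own requirements.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    size_gb: float
    reads_per_month: int


def placement(ds: Dataset, large_gb: float = 500.0, rare_reads: int = 5) -> str:
    """Return "cloud" for large or rarely read data, otherwise "in-house".

    Both thresholds are assumed values chosen for illustration.
    """
    if ds.size_gb >= large_gb or ds.reads_per_month <= rare_reads:
        return "cloud"
    return "in-house"


# Hypothetical datasets
backup = Dataset("nightly-backup", size_gb=2_000, reads_per_month=1)
catalogue = Dataset("product-catalogue", size_gb=20, reads_per_month=40_000)

backup_target = placement(backup)        # "cloud"
catalogue_target = placement(catalogue)  # "in-house"
```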

What is a hyperscaler?

A hyperscaler is the operator of a data centre that offers scalable cloud computing services. The first company to enter this market was Amazon in 2006, with Amazon Web Services (AWS). AWS is a subsidiary of Amazon that helps to optimise use of Amazon’s data centres around the world. AWS also offers an extensive range of specific services. It holds a market share of around 40%. The other two big players in this market are Microsoft, with its Azure service (2010), and the Google Cloud Platform (2010). IBM is also a major provider of hyperscale computing solutions. All of these companies also work with approved partners to offer their technical services via local data centres in specific countries. This is an important consideration for many companies, especially since the General Data Protection Regulation came into force.

The IONOS Cloud is a good alternative to large US hyperscalers. The solution’s main focus is on Infrastructure as a Service (IaaS), and it includes offers for Compute Engine, Managed Kubernetes, S3 Object Storage, and a Private Cloud.