What are AI tokens? Definition, functionality and calculation.

AI tokens are the smallest units of text that AI models process and interpret. AI tokenization breaks language down into these building blocks, which form the basis for analysing and generating text. Tools such as the OpenAI Tokenizer show quickly and easily how a given text is split into tokens.

What are AI tokens?

AI tokens (artificial intelligence tokens) are the smallest units of data that AI models such as ChatGPT, Llama 2 and Copilot work with. They are the most important building block for processing, interpreting and generating text, because only by breaking a text down into tokens can artificial intelligence understand language and provide suitable answers to users’ queries.

How many AI tokens a text is made up of depends on various factors. In addition to the length of the text, the language used and the AI model also matter. If you use API access such as the ChatGPT API, the number of tokens also determines the costs incurred. In most cases, AI applications bill by the number of AI tokens used.
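As a rough illustration of token-based billing, the sketch below multiplies a token count by a price per million tokens. The function name `estimate_cost` and the price used in the example are assumptions made for illustration only; actual prices depend on the provider and the model.

```python
from decimal import Decimal


def estimate_cost(token_count: int, price_per_million: Decimal) -> Decimal:
    """Return the estimated cost for a number of tokens.

    price_per_million is the price for 1,000,000 tokens in any currency.
    """
    return price_per_million * token_count / Decimal(1_000_000)


# Hypothetical price of 2.50 per million tokens, not a real price list.
example_price = Decimal("2.50")
print(estimate_cost(52, example_price))         # cost of a 52-token text
print(estimate_cost(1_000_000, example_price))  # cost of one million tokens
```

`Decimal` is used instead of floating-point numbers so that small amounts are not distorted by rounding errors.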

How does AI tokenization work?

The process by which an AI model converts text into tokens is called AI tokenization. This step is necessary because large language models require natural language in a machine-analysable form. Tokenization therefore forms the basis for text interpretation, pattern recognition and response generation. Without this conversion process, artificial intelligence wouldn’t be able to grasp meaning and relationships. The conversion of text into tokens consists of several steps and works as follows:

- Normalisation: In the first step, the text can be converted into a standardised form, which reduces complexity and variance. Classical language-processing pipelines convert the entire text into lower-case letters, remove special characters and sometimes reduce words to their basic forms. The tokenizers of current GPT models skip most of this step and keep capitalisation and special characters, since both can carry meaning.

- Text decomposition into tokens: Next, the AI breaks down the text into tokens, i.e., smaller linguistic units. How the text is broken down depends on the complexity and training method of the model. The sentence ‘AI is revolutionising market research.’ consisted of eleven tokens in GPT-3, nine tokens in GPT-3.5 and GPT-4, and only eight tokens in GPT-4o.

- Assignment of numerical values: Subsequently, the AI model assigns each AI token a numerical value called a token ID. Each ID refers to one entry in the vocabulary of the artificial intelligence, which contains all the tokens known to the model.

- Processing of the AI tokens: The language model analyses the relationship between the tokens in order to recognise patterns and create predictions or answers. These are generated on the basis of probabilities. The AI model looks at contextual information and always determines subsequent AI tokens based on the previous ones.
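The first three steps can be sketched with a deliberately simplified toy pipeline. It is not the algorithm used by GPT models, which split text into subword units with a vocabulary fixed during training; here the text is split into whole words and the vocabulary is built on the fly. All function names are hypothetical.

```python
import re


def normalise(text: str) -> str:
    """Step 1: lower-case the text and drop special characters."""
    lowered = text.lower()
    # Keep letters, digits, whitespace and basic sentence punctuation.
    return re.sub(r"[^a-z0-9\s.,!?]", "", lowered)


def split_into_tokens(text: str) -> list[str]:
    """Step 2: split the text into words and single punctuation marks."""
    return re.findall(r"[a-z0-9]+|[.,!?]", text)


def build_vocabulary(tokens: list[str]) -> dict[str, int]:
    """Step 3: give every distinct token an ID in order of first appearance."""
    vocabulary: dict[str, int] = {}
    for token in tokens:
        if token not in vocabulary:
            vocabulary[token] = len(vocabulary)
    return vocabulary


sentence = "AI is revolutionising market research."
tokens = split_into_tokens(normalise(sentence))
vocabulary = build_vocabulary(tokens)
token_ids = [vocabulary[token] for token in tokens]

print(tokens)     # ['ai', 'is', 'revolutionising', 'market', 'research', '.']
print(token_ids)  # [0, 1, 2, 3, 4, 5]
```

The toy version yields six tokens for the sentence, whereas the GPT tokenizers mentioned above produce between eight and eleven because they split longer words into several subword tokens.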

How are the tokens of a text calculated?

Tokenizers make it possible to see how an AI calculates tokens, because they break texts down into the smallest processing units. They work according to specific algorithms that are based on the training data and the architecture of the AI model. In addition to displaying the number of tokens, such tools can also provide detailed information on each individual token, such as the associated numeric token ID. This makes it easier both to calculate costs and to optimise the efficiency of texts when communicating with AI models.
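What such a tool reports can be imitated with a small sketch: a greedy longest-match tokenizer over a hand-made vocabulary that returns each token together with its ID. This is a simplified stand-in, not the byte pair encoding that OpenAI models use, and the vocabulary `TOY_VOCABULARY` is invented for the example.

```python
# Hypothetical toy vocabulary: token string -> token ID.
TOY_VOCABULARY = {
    "token": 0,
    "ization": 1,
    "s": 2,
    " ": 3,
    "AI": 4,
}
UNKNOWN_ID = -1  # marks characters that no vocabulary entry covers


def tokenize(text: str) -> list[tuple[str, int]]:
    """Split text greedily, always taking the longest matching vocabulary entry."""
    result = []
    position = 0
    while position < len(text):
        # Try the longest candidate first, then progressively shorter ones.
        for end in range(len(text), position, -1):
            piece = text[position:end]
            if piece in TOY_VOCABULARY:
                result.append((piece, TOY_VOCABULARY[piece]))
                position = end
                break
        else:
            # No entry matches: emit one unknown character and move on.
            result.append((text[position], UNKNOWN_ID))
            position += 1
    return result


pairs = tokenize("AI tokens")
print(pairs)       # [('AI', 4), (' ', 3), ('token', 0), ('s', 2)]
print(len(pairs))  # 4
```

The length of the returned list is the token count, and the second element of each pair is the token ID.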

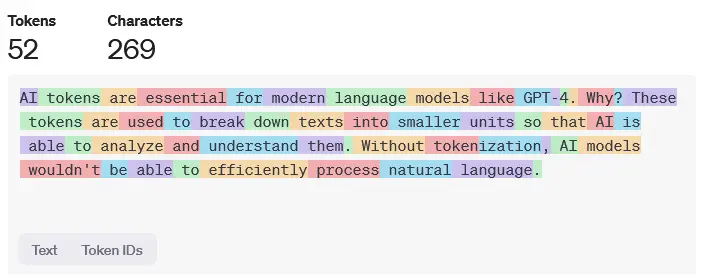

An example of a freely accessible tokenizer is the OpenAI Tokenizer, which is designed for current ChatGPT models. After you’ve copied or typed the desired text into the input field, the application presents the individual AI tokens to you by highlighting the units in colour.

The maximum text length always depends on the token limit of the respective model. The 32k variant of GPT-4, for example, can process up to 32,768 tokens per request, while the standard GPT-4 model was limited to 8,192 tokens.
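A token limit can be checked before a request is sent. The sketch below uses the figure of 32,768 tokens quoted above and assumes that the limit covers the prompt and the generated answer together, which is how the context window of GPT models works. The function names are hypothetical.

```python
TOKEN_LIMIT = 32_768  # figure quoted above; other models use other limits


def fits_token_limit(prompt_tokens: int, reserved_for_answer: int,
                     limit: int = TOKEN_LIMIT) -> bool:
    """Return True if the prompt plus the space reserved for the answer fits."""
    return prompt_tokens + reserved_for_answer <= limit


def truncate_to_limit(token_ids: list[int], limit: int = TOKEN_LIMIT) -> list[int]:
    """Keep only the first `limit` token IDs and drop the rest."""
    return token_ids[:limit]


print(fits_token_limit(30_000, 2_000))  # True: 32,000 tokens fit
print(fits_token_limit(32_000, 1_000))  # False: 33,000 tokens exceed the limit
```

Truncating from the end is the simplest strategy; whether the beginning or the end of a text is more important depends on the use case.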

What are some practical examples of AI tokens and tokenization?

To get a better idea of AI tokenization, we’ve written a short sample text to illustrate it:

AI tokens are essential for modern language models such as GPT-4. Why? These tokens break down texts into smaller units so that the AI has the ability to analyse and understand them. Without tokenization, it would be impossible for AI models to process natural language efficiently.

The GPT-4o model breaks down this text consisting of 269 characters into 52 tokens, which looks as follows: