Apache Kafka

The operation of apps, web services and server applications, to name but a few, presents a variety of challenges for those running them. For example, one of the most common challenges is ensuring that data stream transmissions are unimpeded and that they are processed as quickly and efficiently as possible. The messaging and streaming application Apache Kafka is a piece of software that greatly simplifies this challenge. Originally developed as a message queuing service for LinkedIn, this open source solution now provides a comprehensive solution for data storage, transfer and processing.

What is Kafka?

Apache Kafka is a platform-independent open source application belonging to the Apache Software Foundation which focuses on data stream processing. The project was originally launched in 2011 by LinkedIn, the company behind the social network for professionals bearing the same name. The aim was to develop a message queue. Since its license-free launch (Apache 2.0), this software’s capabilities have been greatly extended, transforming what was a simple queuing application into a powerful streaming platform with a wide range of functions. It is used by well-known companies such as Netflix, Microsoft and Airbnb.

Founded by the original developers of Apache Kafka in 2014, Confluent delivers the most complete version of Apache Kafka with Confluent Platform. It extends the program with additional functions, some of which are also open source, while others are commercial.

What are Apache Kafka’s core functions?

Apache Kafka is primarily designed to optimise the transmission and processing of data streams transferred via a direct connection between the data receiver and data source. Kafka acts as a messaging instance between the sender and the receiver, providing solutions to the common challenges encountered with this type of connection.

For example, the Apache platform provides a solution to the inability to cache data or messages when the receiver is not available (e.g. due to network problems). In addition, a properly set-up Kafka queue prevents the sender from overloading the receiver. This type of situation always occurs when information is sent faster than it can be received and processed during a direct connection. Lastly, the Kafka software is also ideal for situations in which the target system receives the message but crashes during processing. While the sender would normally assume that processing has occurred despite the crash, Apache Kafka reports the failure to the sender.

Unlike pure message queuing services such as databases, Apache Kafka is fault tolerant. This means that the software satisfies requirements to continue processing messages and data. Combined with its high scalability and ability to distribute transmitted information across any number of systems (distributed transaction log), this makes Apache Kafka an excellent solution for all services which need to ensure that data is stored and processed quickly while maintaining high availability.

An overview of the Apache Kafka architecture

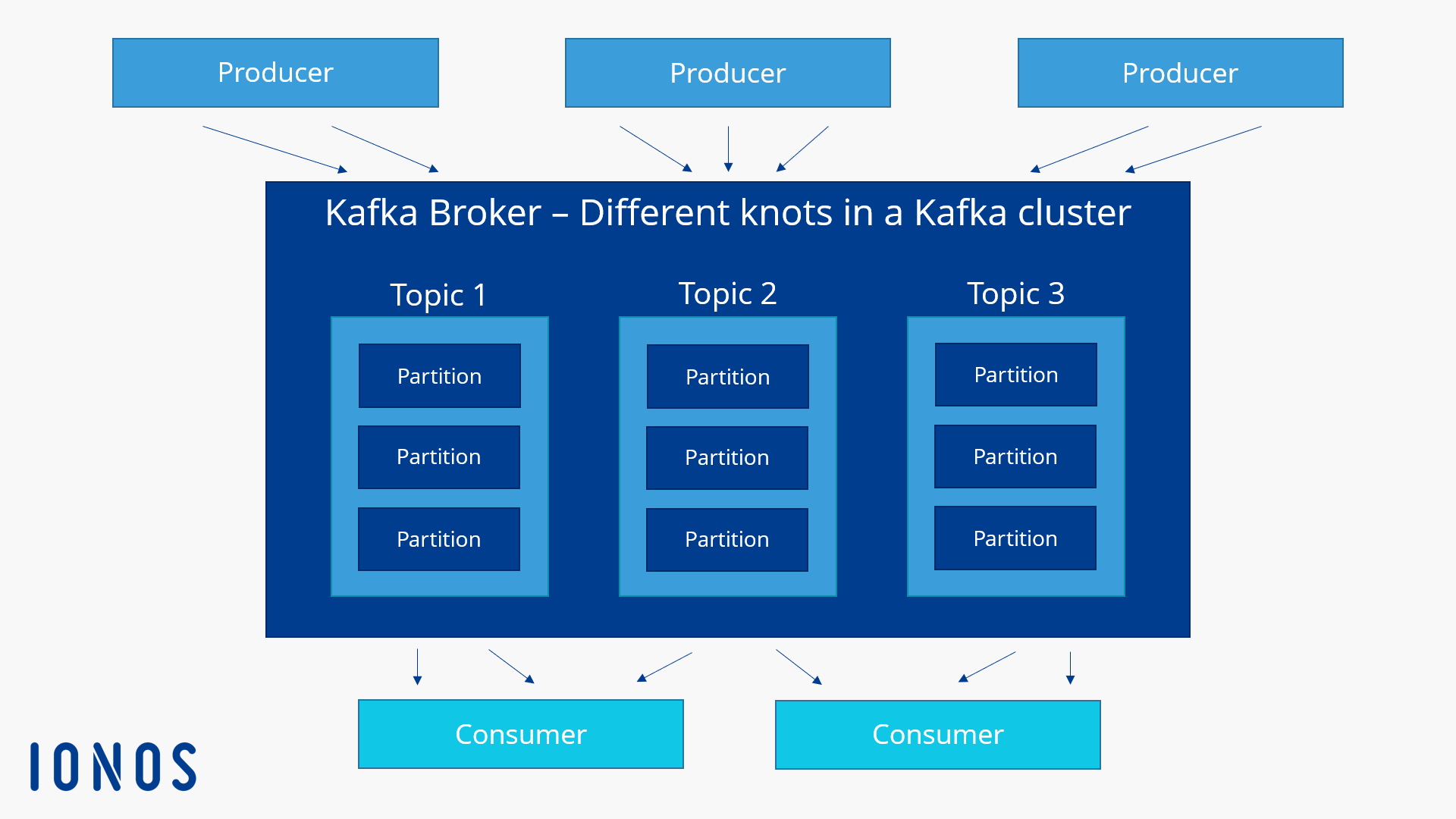

Apache Kafka runs as a cluster on one or more servers that can span multiple data centres. The individual servers in the cluster, known as brokers, store and categorise incoming data streams into topics. The data is divided into partitions, replicated and distributed within the cluster, and assigned a time stamp. As a result, the streaming platform ensures high availability and a fast read access time. Apache Kafka differentiates between normal topics and compacted topics. In normal topics, Kafka can delete messages as soon as the storage period or storage limit has been exceeded, whereas in compacted topics, they are not subject to time or space limitations.

Applications which write data in a Kafka cluster are called producers, while applications which read data from a Kafka cluster are called consumers. The central component accessed by producers and consumers when processing data streams is a Java library called Kafka Streams. By supporting transactional writing, this ensures that messages are only delivered once (with no duplicates). This is called exactly-once delivery.

The Kafka Streams Java library is the recommended standard solution for processing data in Kafka clusters. However, you can use Apache Kafka with other data stream processing systems as well.

The technical basics: Kafka’s interfaces

This software offers five different basic interfaces to give applications access to Apache Kafka:

- Kafka Producer: The Kafka Producer API allows applications to send data streams to the broker(s) in an Apache cluster in order to be categorised and stored (in the previously mentioned topics).

- Kafka Consumer: The Kafka Consumer API gives Apache Kafka consumers read access to data stored in the cluster’s topics.

- Kafka Streams: The Kafka Streams API allows an application to act as a stream processor to convert incoming data streams into outgoing data streams.

- Kafka Connect: The Kafka Connect API makes it possible to build reusable producers and consumers which connect Kafka topics with existing applications or database systems.

- Kafka AdminClient: The Kafka AdminClient API makes it possible to easily manage and inspect Kafka clusters.

Communication between client applications and individual servers in Apache clusters occurs via a simple, powerful and language-independent protocol based on TCP. The developers provide a Java client for Apache Kafka by default, but there are also clients in a variety of other languages such as PHP, Python, C/C++, Ruby, Perl and Go.

Use case scenarios for Apache Kafka

From the outset, Apache Kafka was designed for high read and write throughput. When combined with the previously mentioned APIs and its high flexibility, scalability and fault tolerance, this makes the open source software appealing for a variety of use cases. Apache Kafka is particularly well-suited for the following applications:

- Publishing and subscribing to data streams: This open source project started out by using Apache Kafka as a messaging system. Although the software’s functions have been extended, it is still best suited for direct message transmission via the queuing system as well as for broadcast message transmission.

- Processing data streams: Apache Kafka is a powerful tool for applications which need to react in real time to specific events and which need to process data streams as quickly and effectively as possible for this purpose.

- Storing data streams: Apache Kafka can also be used as a fault-tolerant, distributed storage system, whether it’s 50 kilobytes or 50 terabytes of consistent data that you need to store on the server(s).

Naturally, all these elements can be combined as desired, allowing Apache Kafka, as a complex streaming platform, to not only store data and make it available at any time, but also to process it in real time and link it to all desired applications and systems.

An overview of common use cases for Apache Kafka:

- Messaging system

- Web analytics

- Storage system

- Data stream processor

- Event sourcing

- Log file analysis and management

- Monitoring solutions

- Transaction log